Recently, I’ve begun experimenting with Docker, containerized applications, and cloud-based infrastructure. For those of us interested in hosting a WordPress site on the cloud, the setup, maintenance, and scaling of WordPress can be quite challenging. However, with the use of Docker, Google Cloud, Git, and a simple orchestration method, setting up and maintaining WordPress can be a breeze.

In addition to the ease of setup, containerization significantly reduces the attack surface area. There’s no need to run PHP on the server, no need to mess with Apache, and due to the ephemeral nature of Docker, if the site gets compromised, simply remove the container and start fresh.

There’s a lot to enjoy about setting up a Dockerized WordPress site, but the material on how to do so is scattered. Perhaps in a future article, I can go over ways to scale horizontally, but for most, a simple single-node setup with WordPress, a database, and an Nginx reverse proxy is more than enough.

Step 1: Get a Virtual Machine

Get yourself a cloud VM. Personally, I use the Google Cloud Platform as that is my preference. This guide will focus on their solution, but the ideas are agnostic of your provider and can easily translate to other platforms. Considering that GCP’s documentation is good and there are plenty of online guides for setting up a Compute Engine instance, I will simply point you there. I will add that, at the time of writing, Google offers an always free tier. It includes an e2-micro Compute Engine instance with 0.25-1 vCPU, 1 GB of RAM, and 30 GB of standard disk storage. For someone just starting out, with very low traffic, this can be a fine solution to get the ball rolling, plus it’s totally free. Given the incredibly small amount of resources, I’d highly recommend running a very minimal OS (like Ubuntu Minimal) and configuring swap to at least add some headroom to the whole operation. A good article for setting up a swap can be found here. I’d also suggest tuning the swappiness and vfs_cache_pressure, like the article outlines. The beautiful thing about the cloud is that things can easily be upgraded if your website starts gaining traction.
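As a rough sketch of the swap setup mentioned above, the commands below create a swap file and make the kernel less eager to swap. The /swapfile path, the 2G size, and the swappiness and vfs_cache_pressure values of 10 and 50 are assumptions chosen for illustration, not values taken from the linked article, and the sketch assumes an ext4 root filesystem as on a default Ubuntu image. By default the script only prints what it would do; set DRY_RUN=0 to actually run it.

```shell
# Illustrative defaults (assumptions); override them from the environment.
SWAP_FILE="${SWAP_FILE:-/swapfile}"
SWAP_SIZE="${SWAP_SIZE:-2G}"
SWAPPINESS="${SWAPPINESS:-10}"
CACHE_PRESSURE="${CACHE_PRESSURE:-50}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_swap() {
  run sudo fallocate -l "$SWAP_SIZE" "$SWAP_FILE"   # reserve space for the file
  run sudo chmod 600 "$SWAP_FILE"                   # readable by root only
  run sudo mkswap "$SWAP_FILE"                      # format it as swap
  run sudo swapon "$SWAP_FILE"                      # enable it immediately
  run sudo sysctl -w "vm.swappiness=$SWAPPINESS"
  run sudo sysctl -w "vm.vfs_cache_pressure=$CACHE_PRESSURE"
}

setup_swap
```

These settings do not survive a reboot on their own. To make them permanent, add the line "/swapfile none swap sw 0 0" to /etc/fstab and put the two vm.* settings in a file under /etc/sysctl.d/.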

Step 2: Domain names and DNS hosting

Once your Compute Engine instance is up, follow Google’s guide to reserving a static external IP. If you don’t already have a domain name, now is the time to get one. I personally use Cloudflare as my domain registrar and DNS host. They are a reputable company and offer domain names at cost. Keep in mind that if you purchase a domain name from Cloudflare, they require you to use their DNS hosting. If that is a deal-breaker, you’ll need to look elsewhere. Once you have your domain name and the static IP address of your server, you’ll need to set up two DNS records: an A record, which stands for Address record, and a CNAME record, which stands for Canonical Name record. The A record will point your-domain-name.com to your raw IPv4 address. The CNAME record will point an alias, like the www.your-domain-name.com version of your address, to its canonical name, aka your-domain-name.com, sans the www.

| Type | Name | Content | TTL |
| --- | --- | --- | --- |
| A | your-domain-name.com | 192.0.2.1 (put your external IPv4 address here) | 3600 |
| CNAME | www.your-domain-name.com | your-domain-name.com | 3600 |
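Once the records are saved, it can help to confirm that both names resolve to your server before moving on. The helper below is a minimal sketch: check_record and resolve_ipv4 are hypothetical names, and it assumes a glibc-based system such as Ubuntu, where getent supports the ahostsv4 database. If the Cloudflare proxy is switched on, the names will resolve to Cloudflare’s addresses rather than your VM’s, so run this with the proxy off.

```shell
# resolve_ipv4 NAME: print the first IPv4 address the system resolver returns.
resolve_ipv4() {
  getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# check_record NAME EXPECTED_IP: compare the resolved address with the expected one.
check_record() {
  actual="$(resolve_ipv4 "$1")"
  if [ "$actual" = "$2" ]; then
    echo "OK   $1 -> $actual"
  else
    echo "FAIL $1 -> ${actual:-no answer} (expected $2)"
    return 1
  fi
}

# Example (replace with your own domain and static IP):
#   check_record your-domain-name.com 192.0.2.1
#   check_record www.your-domain-name.com 192.0.2.1
```

New records can take a little while to become visible, so a FAIL right after saving them is not necessarily a misconfiguration.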

Now, if you are using Cloudflare, I’d suggest proxying the traffic through their servers, as you can configure extra security on their end. Before setting up the Cloudflare proxy, I like to keep it off to test my server’s certs first (more on TLS and certs later). When you do turn the proxy on, set the SSL/TLS encryption mode to Full (strict).

Step 3: Installing the requisite software

Install Docker, Docker Compose, and Git. The installation process for these applications can vary from operating system to operating system, so I’d suggest visiting their official websites and following their guides for installing on your operating system. In our case, we will use Docker as the tool to containerize our app, Docker Compose as a simple orchestration utility, and Git for managing our wp-content folder. On a more distributed platform, Kubernetes would be used, but for a single instance on one host, the ease and lightweight nature of Docker Compose is great.
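After installing, a quick sanity check saves debugging later. The function below is a small sketch (check_tools is a hypothetical helper name) that reports which of the tools you pass it are missing from your PATH.

```shell
# check_tools TOOL...: report which of the given tools are missing from PATH.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   check_tools docker git && docker compose version
```

The docker compose version call in the example confirms that the Compose plugin is installed alongside Docker itself.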

Step 4: Directory Structure

Once your VM is running and Docker, Docker Compose, and Git are installed, we are ready to set up our working directories. I prefer to set mine up under the home directory. Create the following:

user@host:~$ cd ~/

user@host:~$ mkdir WordPress/

user@host:~$ mkdir WordPress/wp-content/

user@host:~$ cd WordPress

user@host:~/WordPress$ touch .env

user@host:~/WordPress$ touch docker-compose.yaml

After your directories are set up, create a repository on GitHub and initialize your Git repo in the WordPress folder. You should keep everything here but the .env file under source control. The .env file is used to set up environment variables, hold database passwords, etc. As such, it is a security risk to have it on GitHub, so add it to .gitignore before your first commit and keep it local but backed up.

user@host:~/WordPress$ git init

user@host:~/WordPress$ echo ".env" >> .gitignore

user@host:~/WordPress$ git add .

user@host:~/WordPress$ git commit -am "initial commit"

user@host:~/WordPress$ git remote add origin https://github.com/your_name/your_repo.git

user@host:~/WordPress$ git push -u origin master

Step 5: Creating your containers

Now that our directories, files, and Git repository are created, use your favorite editor to open the docker-compose.yaml and .env files. Docker Compose uses a YAML file to define services and how you’d like to orchestrate them together (startup, shutdown, networking, dependencies, persistence, etc.). Paste the following into the respective files and we will go over the setup.

docker-compose.yaml

services:
  # Database
  db:
    image: mysql:8.3.0
    volumes:
      - db_data:/var/lib/mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: "${DB_ROOT_PASSWORD}"
      MYSQL_DATABASE: "${DB_NAME}"
      MYSQL_USER: "${DB_USER}"
      MYSQL_PASSWORD: "${DB_PASSWORD}"
    networks:
      - wpsite

  # WordPress
  wordpress:
    depends_on:
      - db
    image: wordpress:latest
    ports:
      - '8017:80'
    restart: always
    volumes:
      - type: bind
        source: /home/user/WordPress/wp-content
        target: /var/www/html/wp-content
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: "${DB_USER}"
      WORDPRESS_DB_PASSWORD: "${DB_PASSWORD}"
    networks:
      - wpsite

networks:
  wpsite:

volumes:
  db_data:

.env file

DB_NAME=wordpress

DB_ROOT_PASSWORD=your_db_root_password

DB_USER=your_db_username

DB_PASSWORD=your_db_password
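Rather than inventing passwords by hand, you can generate them. The sketch below writes a .env file with random alphanumeric passwords and restricts it to your user. The helper names random_secret and write_env, the 32-character length, and the wp_user username are assumptions for illustration. Sticking to letters and digits avoids characters such as $ that Docker Compose would try to interpolate.

```shell
# random_secret LENGTH: print LENGTH random alphanumeric characters.
random_secret() {
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$1"
}

# write_env FILE: create FILE with generated passwords, readable only by you.
write_env() {
  env_file="$1"
  (
    umask 077   # new files get mode 600 inside this subshell
    {
      echo "DB_NAME=wordpress"
      echo "DB_ROOT_PASSWORD=$(random_secret 32)"
      echo "DB_USER=wp_user"   # hypothetical username; pick your own
      echo "DB_PASSWORD=$(random_secret 32)"
    } > "$env_file"
  )
}

# Example:
#   write_env ~/WordPress/.env
```

MySQL only applies these values the first time the db_data volume is initialized, so generate the file before the first docker compose up and keep a backup of it somewhere safe.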

Now that we have our Docker Compose file and .env set up, let’s unpack what’s going on.

- The services attribute: We are setting up two services, named db and wordpress. Our db service will pull down the mysql:8.3.0 image from Docker Hub. It’s best practice to specify a version of MySQL since not all versions are compatible. Using the latest image can lead to unexpected, breaking changes. If you want to update the version, test before updating your production YAML. The wordpress service, on the other hand, should pull down the latest and greatest version, as WordPress continuously makes bug fixes, patches, and updates to its core engine.

- The volumes attribute: A volume is a way for Docker to persist data generated by a Docker container even after the container is removed. Since we don’t want the contents of our database to be blown away with the container, it’s important to have Docker persist this. In our configuration, we are creating a named volume called db_data and mapping it to the /var/lib/mysql path in our db service’s container. By default, a Docker container running a MySQL database will automatically create a volume for storing the data, but the default naming is not very human-readable, so it’s best to define it ourselves.

- The restart attribute: This specifies how containers should respond to events or failures. We set ours to always because we want our db and wordpress services to restart automatically if they crash. Docker advises using its built-in restart policies over external process managers for container management.

- The environment attribute: By default, Docker Compose will search for a .env file in the running directory. In our case, we are using this file to store our database name, username, and passwords, then referencing these variables in our Docker Compose file to initialize the needed environment. By providing these, Docker will create our schema, set our database passwords and usernames, and configure our WordPress container correctly to access our database instance.

- The networks attribute: Networks allow containers within the same network to communicate with each other using their service names as hostnames. By default, Docker Compose sets up a single default network that allows your apps to communicate. Although it’s not strictly necessary to create a custom network name, I am creating one called wpsite. Then, under the wordpress and db services, I am connecting each to the wpsite network using their respective network attributes.

- The ports attribute: By default, all Docker ports are closed to the outside world. Although this is great from a security perspective, we actually need the host computer to reach our WordPress site and serve it to clients. In order to do this, we need to open up a port. By default, the WordPress Apache server runs on port 80. In the ports section, under the wordpress service, we are mapping port 80 to the host computer’s port 8017. The choice of mappings is up to you. It’s worth noting that I am choosing not to expose a database port. Since containers on the same network have access to each other’s ports, the wordpress and db services can still communicate over port 3306, the default MySQL port, but we will not be able to access the db port from the host computer. I’ve chosen to omit a port mapping for db as an added layer of security. However, if you need to connect to the database from the host machine, you will have to expose a port. Never open this port to the outside world. For our use case, our VM’s firewall should only allow traffic on ports 80, 443, and 22 for HTTP, HTTPS, and SSH traffic, and absolutely nothing else.

- The wordpress service’s volumes attribute: You may have noticed that we are handling the wordpress volumes section slightly differently than the db service. For the wordpress service, we are bind-mounting the wp-content folder on the host to the /var/www/html/wp-content folder inside our container. A bind mount directly maps a directory on the host machine to a directory inside a running container. The mapping has read and write privileges: any changes inside the container are reflected on the host. In contrast to a volume, which Docker creates in its own storage directory and manages directly, a bind mount needs a complete directory path on the host machine and is not managed by Docker in the same way. Since we are using Git to source control this folder, I found this setup to be ideal. The wp-content folder contains all of our themes, uploads, and plugins. I like to think of it as separate from the WordPress core. It is the location where most people will be making updates and changes, and as such, it should be source-controlled.

- The depends_on attribute: Docker Compose provides the ability to control the order in which services are started and shut down. Since our WordPress site depends on the database being up, we want to specify this dependency. Now that we have specified that the wordpress service depends on db, Docker Compose will ensure db is started first and shut down last. Keep in mind that, written this way, it only controls the order in which the containers start; it does not wait for MySQL to be ready to accept connections.
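Since the compose file leans on variables from .env, a typo in either file tends to surface only as a confusing startup failure. As a minimal sketch, the hypothetical helper below (check_env_vars is not part of Docker) lists every ${VAR} referenced in the compose file that the .env file does not define.

```shell
# check_env_vars COMPOSE_FILE ENV_FILE
# Fails if the compose file references a ${VAR} that the .env file does not define.
check_env_vars() {
  compose_file="$1"
  env_file="$2"
  missing=0
  # Collect the unique variable names used as ${NAME} in the compose file.
  for var in $(grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$compose_file" | tr -d '${}' | sort -u); do
    if ! grep -q "^${var}=" "$env_file"; then
      echo "not defined in $env_file: $var"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   check_env_vars docker-compose.yaml .env
```

Docker Compose can also show you the result of the substitution: running docker compose config in the WordPress directory prints the fully resolved configuration, which is a good last look before starting anything.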

Okay, so now we are almost ready to rock. Our software is installed, our directories are in place, a Git repository has been created, and our compose file is ready to go. Before we fire things up, we need to make a small edit to our docker-compose.yaml file. Remember, our wordpress service is mapping port 80 in the container to port 8017 on the host. At the moment, port 8017 is not open to the internet due to our firewall settings. Since we want to test our site and WordPress install before proceeding, let’s temporarily change the ports entry from '8017:80' to '80:80' so that port 80 in the container maps to port 80 on the host. Make the update, save it, and run the following command.

user@host:~/WordPress$ docker compose up -d

This will pull down the images and start your containers. The -d flag signifies detached mode, meaning your containers will run in the background. Once the containers are up and running, let’s ensure the site is reachable. Open up a browser and type the IP address of your VM into the address bar. You should be greeted with the WordPress installation wizard. Select your language and follow the prompts. At this point, we just want to make sure all our connectivity is good. Once you hit the WordPress dashboard, you’re good to go to the next step.

Step 6: Setting up an NGINX reverse proxy

Great, the web server is reachable, and things are moving along. It’s time to install Nginx, our reverse proxy. We’ll want something sitting in front of our application, handling SSL/TLS, load balancing, caching, etc. There’s a lot of debate about whether Nginx should be containerized; ultimately, the decision is yours. There are compelling arguments either way. I’m opting to install Nginx directly on the server. I find managing certs with Certbot is easier this way. To begin, let’s start by bringing down your containers.

user@host:~/WordPress$ docker compose down

Once the containers are stopped, revert the port mapping for WordPress back to '8017:80'. Our Nginx process will be listening on port 80 from now on. Now, restart the containers using the same docker compose up -d command from earlier. To test that WordPress is running on port 8017, run the following command.

user@host:~/WordPress$ docker compose ps

Under the PORTS column, you should see for your WordPress service: 0.0.0.0:8017->80/tcp. That is the correct mapping we are looking for. A quick netcat test can confirm connectivity.

user@host:~/WordPress$ nc -vv localhost 8017

Connection to localhost port 8017 [tcp/*] succeeded!

Now let’s install and configure Nginx. Like earlier, the installation and configuration will vary depending on the flavor of Linux you’re using, so consult the official site for installation instructions. This guide will focus on Ubuntu.

user@host:~$ sudo apt update

user@host:~$ sudo apt install nginx

To configure the proxy, follow the commands below. I like to name my configuration file www.your-domain-name.com, but choose the naming convention you prefer. Remember to use your domain name in place of “your-domain-name”.

user@host:~$ cd /etc/nginx/sites-available

user@host:/etc/nginx/sites-available$ sudo vi www.your-domain-name.com

Paste the following.

server {
    root /var/www/html;
    listen 80;
    listen [::]:80;
    server_name your-domain-name.com www.your-domain-name.com;

    location / {
        proxy_pass http://127.0.0.1:8017;
        proxy_redirect off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Host $server_name;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

Now, to enable this configuration, we need to symbolically link it to the sites-enabled directory. Follow the commands below.

user@host:~$ cd /etc/nginx/sites-enabled/

user@host:/etc/nginx/sites-enabled/$ sudo ln -sfn /etc/nginx/sites-available/www.your-domain-name.com www.your-domain-name.com

user@host:/etc/nginx/sites-enabled/$ sudo service nginx restart

At this point, your Nginx server should be up and running. All traffic coming to port 80 should be proxied by Nginx to http://127.0.0.1:8017, which is your WordPress site. Open up a browser and type your domain into the address bar. You should see your website.
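For later edits to the proxy configuration, it is safer to validate the file before applying it, since a syntax error would otherwise take the site down. The function below is a small sketch of that habit (apply_nginx_config is a hypothetical name). It assumes a systemd-based system such as Ubuntu and uses a reload instead of the full restart shown above, which applies the new configuration without dropping open connections.

```shell
# apply_nginx_config: reload Nginx only if the configuration passes its syntax check.
apply_nginx_config() {
  if sudo nginx -t; then
    sudo systemctl reload nginx
  else
    echo "nginx -t failed; leaving the running configuration untouched" >&2
    return 1
  fi
}

# Example:
#   apply_nginx_config && curl -I http://your-domain-name.com
```

The curl -I call in the example prints only the response headers, which is enough to confirm that Nginx is answering for your domain.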

Step 7: SSL/TLS and Certificates

Congratulations, you’ve got your site up and running. Now we need to secure it with SSL/TLS by getting proper certificates in place and directing all traffic over HTTPS. No one wants to visit your unencrypted and uncertified site, and search engines certainly won’t be promoting it. To do this, we will need to generate legitimate certificates for our website and direct all traffic to port 443. The tool I use for certificates is Certbot. It’s a great, free, open-source utility that leverages the Let’s Encrypt CA. It automates the whole process and will automatically renew your certs before they expire. Simply go to https://certbot.eff.org and follow their instructions. BOOM! You’ve got certs, all your traffic is over HTTPS, you’re official. Visit your site once more and verify.
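To give a feel for what the Certbot instructions boil down to on an Nginx host, here is a hedged sketch. It assumes Certbot and its Nginx plugin are already installed as described on the Certbot site; issue_cert and renew_check are hypothetical wrapper names, and the official instructions for your OS take precedence over anything here.

```shell
# issue_cert DOMAIN [DOMAIN...]: request one certificate covering every name given
# and let Certbot's Nginx plugin update the matching server block.
issue_cert() {
  args=""
  for domain in "$@"; do
    args="$args -d $domain"
  done
  # Word splitting of $args is intended: each "-d name" pair becomes two arguments.
  sudo certbot --nginx $args
}

# renew_check: simulate a renewal without touching the live certificates.
renew_check() {
  sudo certbot renew --dry-run
}

# Example:
#   issue_cert your-domain-name.com www.your-domain-name.com
#   renew_check
```

Running the renewal dry run once after issuing is a cheap way to confirm that the automatic renewals will work when the time comes.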

Step 8: Sip your coffee, you’re done!... except for email

Congratulations, your site is up and running! Take a moment to savor the achievement and sip your coffee. But before you get too comfortable, we need to chat about… email.

By default, WordPress relies on PHP’s mail function, which in turn uses Linux’s sendmail utility. However, there’s a hitch: this won’t work for a multitude of reasons, the main one being that GCP blocks all egress traffic on port 25, the standard SMTP port. While this might seem restrictive, it’s actually a security measure. Port 25 is often exploited by spammers and rarely trusted by email servers.

But no worries, there are various WordPress plugins and third-party APIs that can help us out. A plugin such as WP Mail SMTP lets you bypass this limitation by handing your mail to an external mail service instead. If you’re serious about email communications, integrating your WordPress site with a reputable third-party email service is highly recommended. Google provides a list of recommended email service providers here, along with valuable insights into how Compute Engine instances interact with email. Have a look and choose the right option for you.

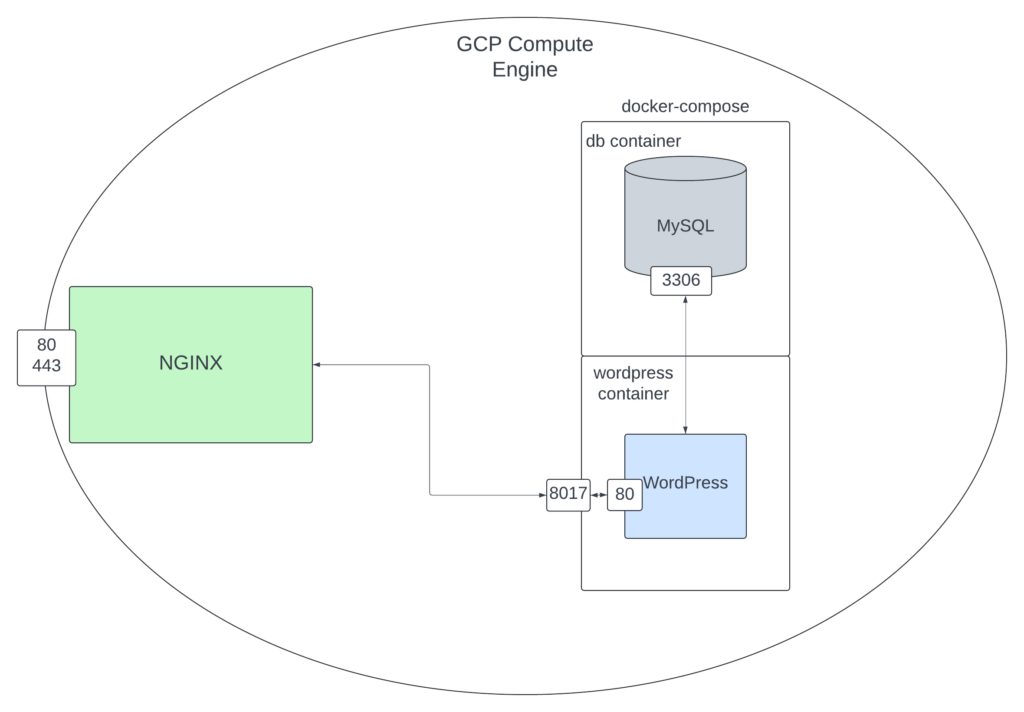

Diagram of setup

Conclusion

Finally, you’re done! You now have a containerized WordPress site running on the cloud. To summarize, we have set up a Compute Engine instance on GCP, created Docker containers to run our database and WordPress services, placed an Nginx reverse proxy in front of the whole operation, successfully issued certs, and routed all traffic over HTTPS.

From this point forward, maintaining and scaling should be a breeze. Set up scheduled backups of the database volume, and anytime you update a plugin or theme, push a commit to Git. By following a good maintenance routine, recovery is simple. In the event you need to roll back or something gets compromised, nuke the containers. The entire core is ephemeral due to the nature of Docker. Roll back to a good state or a different version and address any problems. Updating or switching versions is easy as well. Pull down the new images, recycle the containers, and off you go. Moreover, by containerizing our applications, our attack surface area is reduced. There is no database port exposed, even on the local server, no PHP or Apache running directly on the host, all our plugins, themes, and uploads are managed in Git, and we get the reliability of Google Cloud’s infrastructure. We are in a good position; obviously, there’s more we can do in terms of security, improvements, scalability, etc., but for starters, this is perfect.

I’d also like to point out that local testing and development are now just as simple. Tweak your compose file, pull your wp-content folder down, and spin up a local version of the site. In the same vein, switching hosts is also just as easy. Have fun with it. If your site starts picking up traffic, scale up your Compute Engine instance. At some point, you may want to offload your database to Cloud SQL, GCP’s managed cloud database service, or explore the myriad of other offerings GCP can provide. Heck, if things really start to pick up, you may want to move to a more distributed architecture that can respond to the ebbs and flows of your traffic. The sky’s the limit, no pun intended. Either way, this simple setup will serve you well. Most importantly, enjoy the process and the learning along the way!
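For the scheduled database backups mentioned in the conclusion, one possible starting point is a logical dump taken through the running db container. The sketch below assumes the service is named db as in the compose file and that the container has MYSQL_ROOT_PASSWORD set. The function name backup_db, the file naming, and the directory layout are assumptions, so adapt them to your own routine.

```shell
# backup_db COMPOSE_DIR BACKUP_DIR
# Dumps every database from the db service into a timestamped, gzipped file
# and prints the path of the finished backup.
backup_db() {
  compose_dir="$1"
  backup_dir="$2"
  sql="$backup_dir/wordpress-db-$(date +%Y%m%d-%H%M%S).sql"
  mkdir -p "$backup_dir"
  # -T disables TTY allocation so the dump can be redirected to a file; the
  # root password is read from the environment inside the container.
  if ( cd "$compose_dir" &&
       docker compose exec -T db sh -c \
         'exec mysqldump --all-databases -uroot -p"$MYSQL_ROOT_PASSWORD"' ) > "$sql"; then
    gzip "$sql" && echo "$sql.gz"
  else
    rm -f "$sql"
    echo "database backup failed" >&2
    return 1
  fi
}

# Example:
#   backup_db ~/WordPress ~/backups
```

Saved as a script and called from cron, this gives you a dated dump each night. Copy the resulting files off the VM as well, since a backup that lives only on the same disk will not help if the instance itself is lost.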